The personal computer (PC) industry is undergoing a top-down technological revolution driven by the rapid advancement of artificial intelligence (AI). From chip manufacturers such as Intel, AMD, NVIDIA, and Qualcomm to device makers, the entire industry chain is actively promoting the AI PC concept. The goal is to integrate hardware and software deeply to give users a more intelligent and personalized computing experience.

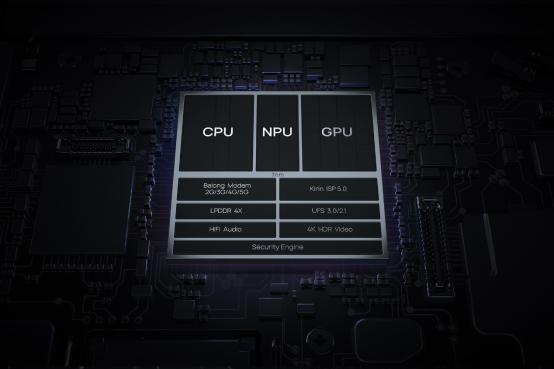

The AI PC's hardware architecture is an upgrade over that of traditional PCs. In addition to the CPU and GPU, AI PCs include a Neural Processing Unit (NPU), a new component designed to provide more powerful AI computing capabilities. However, a recent test of one manufacturer's AI PC found that when it ran large AI models locally, the system did not use the NPU at all and relied entirely on the GPU for computation. This result has led some in the industry to question whether AI PCs need an NPU at all.
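Whether a large model can run locally at all, and on which processor, depends heavily on memory. The sketch below is a rough back-of-the-envelope estimate, not a measurement: it assumes the model weights dominate memory use and ignores the KV cache, activations, and runtime overhead. The 7-billion-parameter model and the 8 GB memory budget are hypothetical examples, and the helper names are illustrative only.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate; KV cache, activations, and runtime overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, device_memory_gb: float) -> bool:
    """Return True if the weights alone fit in the given device memory."""
    return weight_memory_gb(num_params, precision) <= device_memory_gb


if __name__ == "__main__":
    params = 7e9        # hypothetical 7-billion-parameter model
    budget_gb = 8.0     # hypothetical 8 GB of GPU memory
    for precision in ("fp16", "int8", "int4"):
        gb = weight_memory_gb(params, precision)
        print(f"7B @ {precision}: {gb:.1f} GB, fits in {budget_gb:.0f} GB: {fits(params, precision, budget_gb)}")
```

Under these assumptions, a 7B model needs about 14 GB at FP16 but only about 3.5 GB at 4-bit precision, which is why quantization is commonly used to fit models onto consumer hardware.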

Development Stages of AI PC

GPUs have a significant advantage in handling large AI models, and many open-source AI models can be deployed for inference on home computers using GPU power. In the early stage of AI PC development, relying on GPU computing power is a safe and fast approach. At a press conference, Zhang Hua, Vice President of Lenovo Group, listed five conditions for a mature AI PC: local hybrid AI computing power combining CPU, GPU, and NPU as standard; an embedded personal large model; localized personal data with privacy protection; an open AI application ecosystem; and an embedded personal agent. He also predicted that AI PC development will proceed in three stages, each bringing further integration and innovation of hardware and software.

1. AI Ready Phase (December 2023 to April 2024): This phase centers on hardware platform support, including hybrid AI computing power from the CPU, GPU, and NPU. It gives device manufacturers and third-party application developers a foundation on which to build new AI experiences.

2. AI Explore Phase (April 2024 to September 2024): In this phase, AI Ready hardware will support more advanced features, such as personal agents, personal large models embedded in the AI PC, and the first third-party AI applications on the AI mini-program platform. These features will rely on the hybrid AI computing power of the CPU, GPU, and NPU and will provide personal AI assistant services based on natural language interaction.

3. AI Master/AI Advanced Phase (after September 2024): This phase will see continued growth in hybrid AI computing power, more capable personal large models, a richer AI application ecosystem, and more diverse business models. Personal agents will cover more scenarios and deliver a better service experience.

Application Prospects

Today, large AI models generally run inference in the cloud, mainly because their size and computing requirements exceed what most local hardware can handle. What sets the AI PC concept apart is running large models locally, which differs significantly from using cloud-based models. AI PCs have broad application prospects, and their potential lies mainly in the following areas.

● Data Privacy Protection: Because local large models do not depend on sending data to the cloud, they have an inherent data security advantage, which is particularly important in work scenarios that require high confidentiality.

● Natural Language Interaction: Users can interact with AI PCs in natural language, for example to quickly find information in documents or to change system settings by voice, which makes the operating system much easier to use.

● Personalized Database Construction: Users can upload large numbers of documents to build a personal database and then use the AI assistant to quickly find the information or data they need.

● Innovative Interaction Experience: Microsoft's "Copilot+ PC" concept, for example, combines AI computing power with AI agents to enable new kinds of interaction, such as reading on-screen information in real time, offering game strategy tips, and editing and generating images with AI.

● Recall Feature: The "Recall" feature works like a "time machine," letting users look back at what they have done on the computer, including websites they visited and images they viewed. Because it relies on local computing power and storage, this data stays on the device, which is intended to protect user privacy.

Conclusion

Although the AI PC concept still needs further exploration, bringing AI to PCs clearly requires ever deeper integration of hardware and software. As a key component of the AI PC, the NPU's role, development direction, and application support will take clearer shape as technology advances and market demand grows. The development of AI PCs will not only drive innovation in hardware but also open up more room for software applications, giving users a more intelligent and personalized computing experience.

NVIDIA is a well-known manufacturer of graphics processing units (GPUs) and AI accelerators, with products widely used in personal computers, gaming consoles, professional workstations, and data centers. NVIDIA's A100 and H100 GPUs are high-performance accelerator cards designed for data center and high-performance computing (HPC) applications, playing an important role in AI training, data analysis, and scientific computing.

The A100-80G is a server GPU based on NVIDIA's Ampere architecture, equipped with 80GB of HBM2e memory. The A100-80G SXM version uses the SXM4 form factor, mounts directly on the server board, and is typically used in high-performance computing systems. The A100-80G PCIe version uses a standard PCI Express interface and can be installed in the PCIe slots of most modern servers.

The H100-80G, based on NVIDIA's Hopper architecture, is the successor to the A100 for AI and high-performance computing. Its GH100 chip is manufactured on a 4nm-class process with 80 billion transistors and up to 18,432 CUDA cores in the full chip (shipping H100 products enable fewer), delivering higher performance and energy efficiency. The 80GB H100 offers higher memory bandwidth than the A100 and is among the most powerful AI accelerators on the market.

Website: www.conevoelec.com

Email: info@conevoelec.com